December is not even halfway over and already we have two big developments on the AI housing policy front. I'm sure many of us have seen the buzz on the Freddie Mac AI bulletin and the executive order. Here's my take on what it means for us in mortgage.

The first interesting thing is the lack of any mention of generative artificial intelligence. The implication is that the more traditional definition of artificial intelligence applies. My definition of artificial intelligence is the very broad field of computer science focused on enabling machines to perform tasks that typically require human intelligence.

One of the most interesting things about the bulletin is that it does NOT say anything about use cases. It does obligate seller/servicers to furnish documentation on the types of AI/ML used, as well as the "purpose and manner" for such use, but it stops short of saying the industry must provide a use case list. Look here for a mortgage AI use case list. Look here and here for a description of AI types, especially those prevalent in mortgage. This is interesting to me because I think the use case list is where the rubber meets the road in mortgage AI. I also think it's one place for differentiation in a mortgage company's AI strategy. I appreciate that this was not called for, as I think it's very specific to each organization.

Another very interesting thing about the bulletin is the extent to which Freddie Mac's own technology will show up. Freddie Mac, of course, makes extensive use of AI/ML systems in its own stack so naturally this will show up in the documentation. I wonder if this means that seller/servicers will also have to test Freddie Mac technology for adherence to Freddie Mac's requirements.

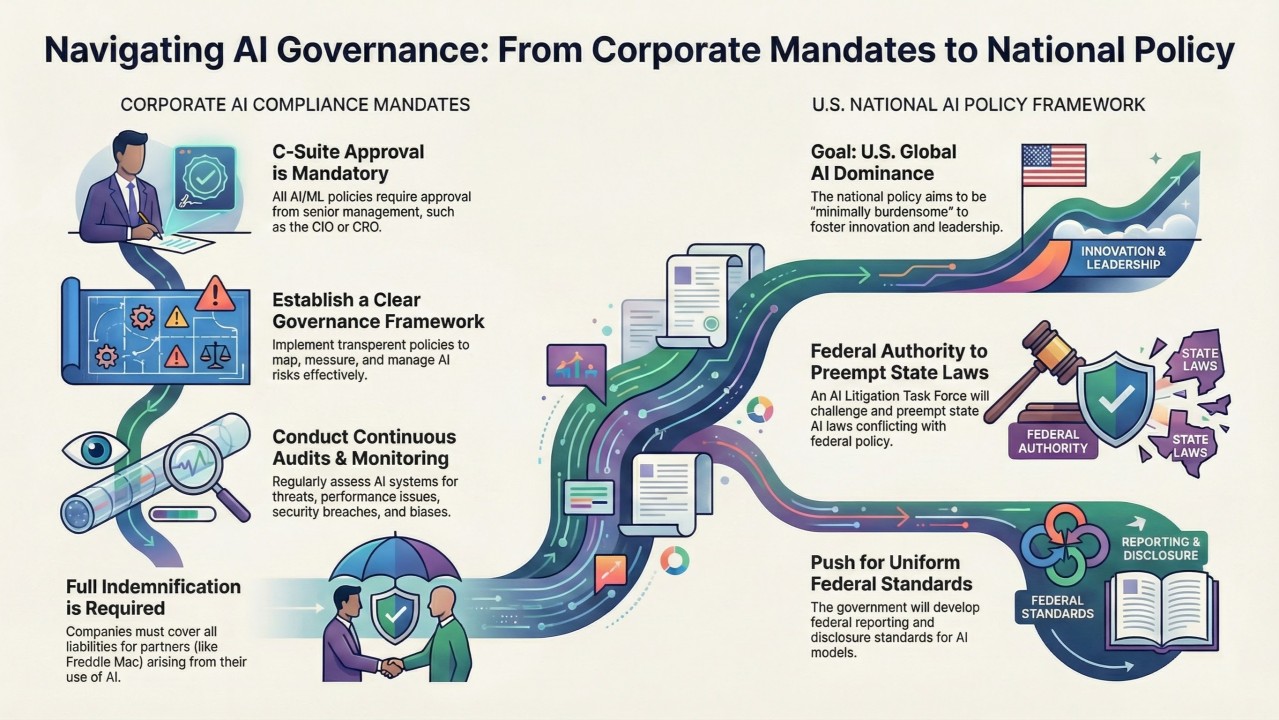

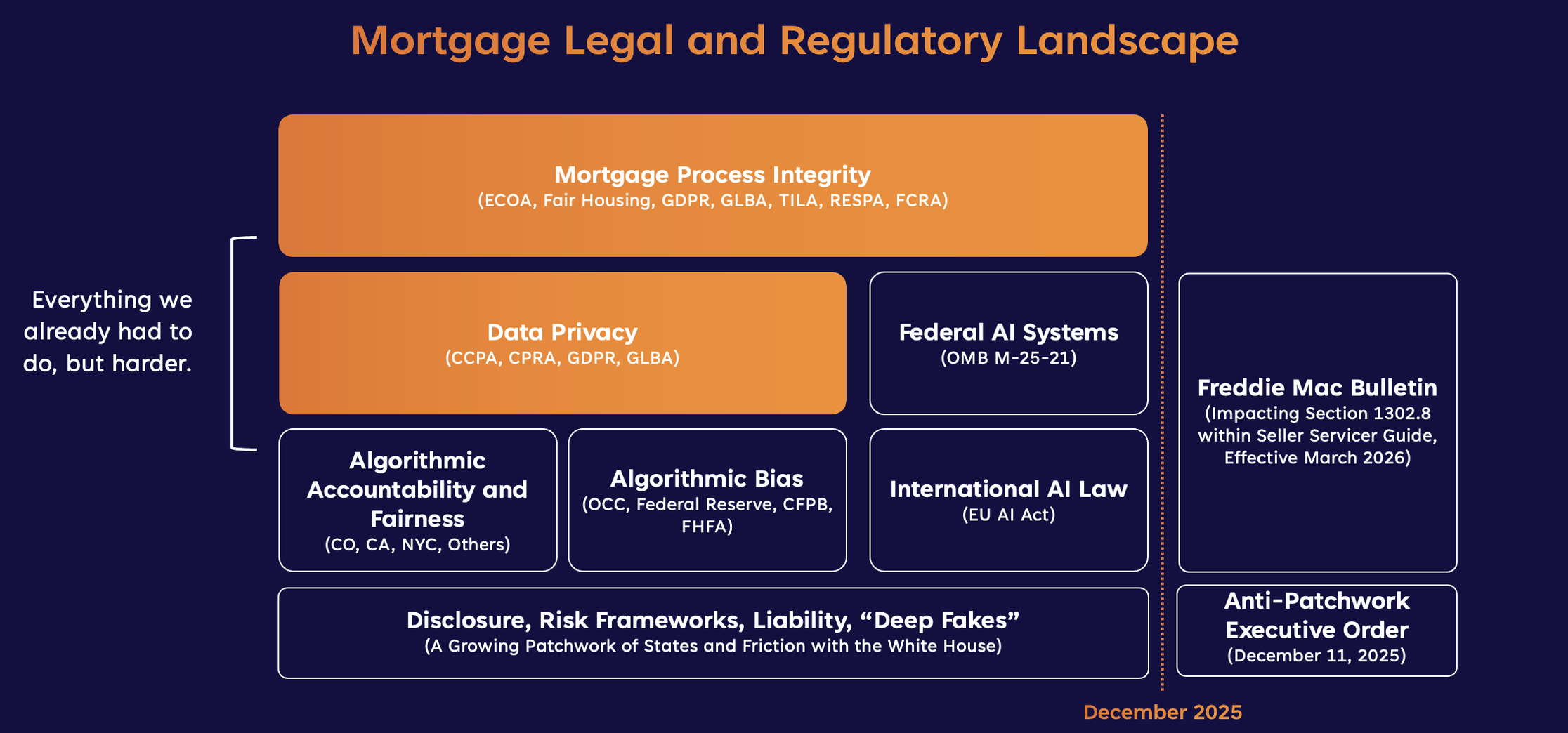

Also interesting is the following statement: "[l]egal and regulatory requirements involving AI are understood, managed, and documented." I really wish this were easy. We have what we have "always" had to do from a process integrity and data privacy perspective, and then we have the patchwork of state requirements (not new to us in mortgage). There is no decoder ring for the rules, and what I generally say is that we have to anticipate where the puck is going to go. I'm happy to see Freddie Mac give us a less gelatinous sense of the puck's destination. Continue reading for my take on the executive order that could change all this.

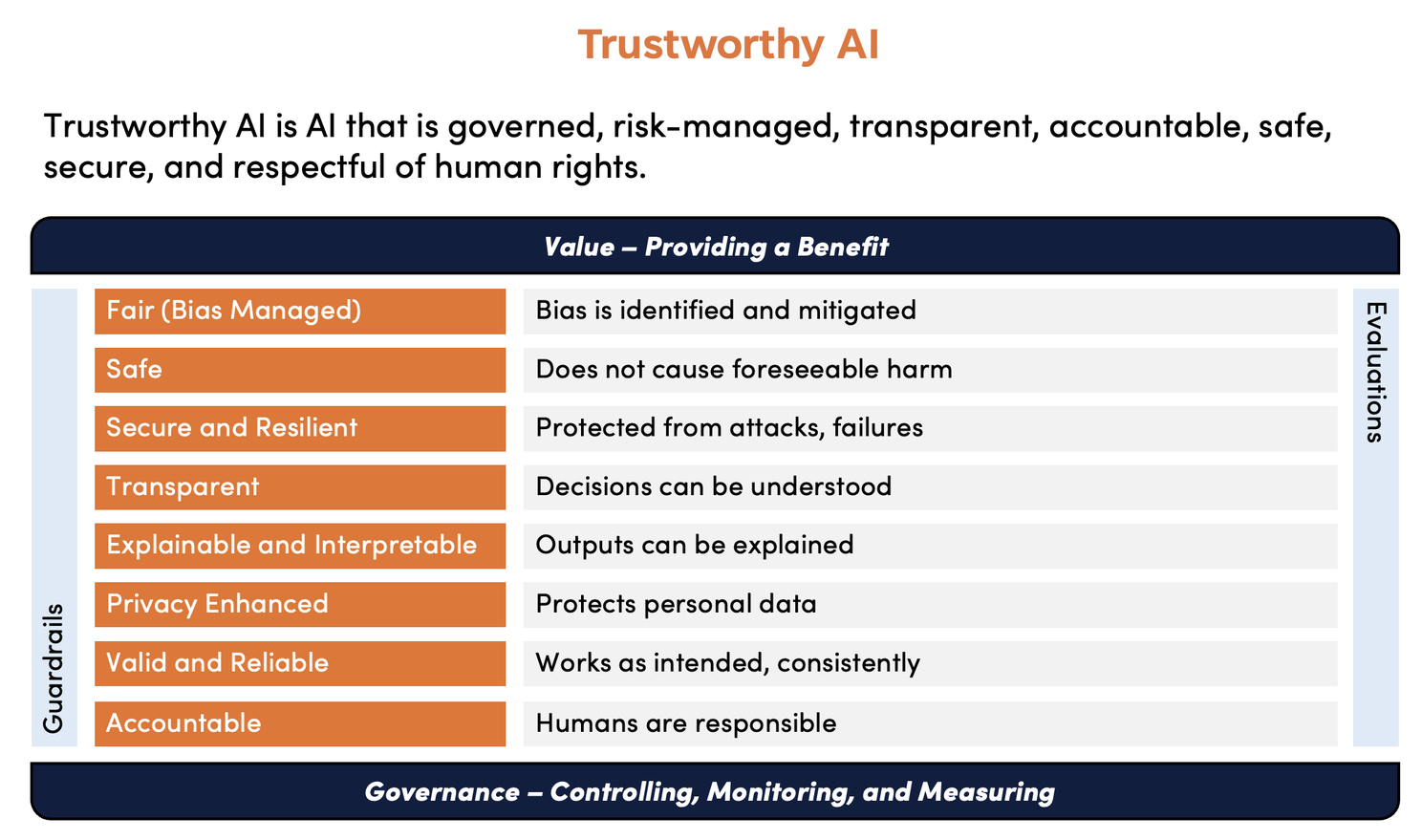

I found the use of the term "trustworthy AI" as opposed to "responsible AI (RAI)" interesting. Upon further review, this certainly harkens back to the NIST definition, which is very close to the RAI framework we teach in our classes. I will start using this term and framework instead. In terms of the trustworthy AI framework defined by NIST, please keep in mind that guardrails and evaluations are a central foundation of a well-implemented framework.

Guardrails are technical and non-technical measures implemented to prevent unsafe, unethical, and unreliable use in production environments. Evaluations measure how well generative AI performs against expectations, helping to ensure outputs are accurate, relevant, and aligned with user goals.
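To make those two definitions a bit more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration and none of it comes from the bulletin or from NIST: the guardrail is a simple pattern check that blocks output containing something shaped like a Social Security number, the evaluation is an exact-match score over a handful of hand-written cases, and `toy_model` is a hypothetical stand-in for a real system.

```python
import re

# Hypothetical guardrail: flag text containing an SSN-shaped string
# (three digits, two digits, four digits) before it leaves the system.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def guardrail_allows(output: str) -> bool:
    """Return True when the output contains no SSN-shaped string."""
    return SSN_PATTERN.search(output) is None


def evaluate(model, cases):
    """Return the fraction of cases where the model's answer matches
    the expected answer, ignoring case and surrounding whitespace."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    passed = sum(
        1
        for prompt, expected in cases
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return passed / len(cases)


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model: a fixed lookup table."""
    answers = {
        "Is a W-2 an income document?": "yes",
        "Is a deed an income document?": "no",
    }
    return answers.get(prompt, "unknown")


cases = [
    ("Is a W-2 an income document?", "Yes"),
    ("Is a deed an income document?", "No"),
    ("Is a 1099 an income document?", "Yes"),
]
score = evaluate(toy_model, cases)
print(f"evaluation score: {score:.2f}")
print("guardrail allows clean text:", guardrail_allows("The loan closed on time."))
```

A real guardrail layer would cover far more than one pattern, and a real evaluation would use a much larger and more carefully built case set. The point is only that one blocks bad output and the other measures performance against expectations.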

The last thing in the bulletin I found interesting was the segregation of duties language. I'll be honest, I hadn't really thought about this before. In context it makes sense. This language is trying to prevent a very specific failure mode. Specifically, it's designed to help ensure that the people who benefit from using an AI system are not also the people who define the risk, “measure” the risk, and sign off that the risk is acceptable. Makes sense.
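One way to picture the check is as a simple rule over who holds which role for a given AI system. The sketch below is my own illustration, not language from the bulletin: the role names, the `AISystemRoles` record, and the conservative rule that the business owner may not hold any of the three risk roles are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AISystemRoles:
    """Hypothetical record of who does what for one AI system."""
    system: str
    business_owner: str  # the person who benefits from using the system
    risk_definer: str
    risk_measurer: str
    risk_approver: str


def duty_conflicts(roles: AISystemRoles) -> list[str]:
    """Return the names of the risk roles also held by the business owner."""
    risk_roles = {
        "risk_definer": roles.risk_definer,
        "risk_measurer": roles.risk_measurer,
        "risk_approver": roles.risk_approver,
    }
    return [name for name, person in risk_roles.items()
            if person == roles.business_owner]


separated = AISystemRoles("income-calc", "Avery", "Blake", "Casey", "Devon")
overlapping = AISystemRoles("doc-classifier", "Avery", "Blake", "Avery", "Avery")

print(duty_conflicts(separated))    # no overlap expected
print(duty_conflicts(overlapping))  # owner also measures and approves
```

Whether any overlap at all should be flagged, or only the case where one person holds every role, is a policy choice each organization would need to make for itself.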

Definitely the most interesting thing about the executive order (to me, anyway) was the statement that the administration intends to "initiate a proceeding to determine whether to adopt a Federal reporting and disclosure standard for AI models that preempts conflicting State laws". Personally, I would welcome a set of requirements for appropriate use of AI systems. Right now, it's really hard to know what to do. Anticipating where the puck is going to go puts a major damper on innovation. Many companies are paralyzed by the lack of clarity, which stymies creativity. I don't think it would be so bad to have a decoder ring. I acknowledge that I may regret this statement in the future.

Also interesting to me is the extent to which these two sets of guidance were released in coordination with each other. I have to think they were reviewed in concert. One does not conflict with the other, but if the Freddie Mac bulletin had been produced by a state government, I wonder how it would be reviewed under the executive order.

Nothing really to do here except watch and wait. Of course, it's a great idea to keep the lines of communication open with your state examiners and regulators and see what they are doing and thinking about it. Until something changes, there are about 150 distinct AI-related laws, ordinances, and legislative proposals out there that we should understand, determining for ourselves whether they apply to us.

By Tela G. Mathias, Chief Nerd and Mad Scientist at PhoenixTeam, CEO at Phoenix Burst